Alexa Blogs

A visual user interface can be a great complement to a voice-first user experience. Echo Show enables you to extend your voice experience to show more information or enable touch interactions for things like picking from a list and watching videos. In this blog post, we’ll walk you through how to build an Alexa skill using the core developer capabilities of Echo Show.

Alexa Skills on Echo Show

All published Alexa skills will automatically be available on Echo Show. Skills will display any skill cards you currently return in your response objects. This is the same as how customers see your Alexa skill cards on the Alexa app, Fire TV, and Fire Tablet devices today. If no skill card is included in your skill’s response, a default template shows your skill icon and skill name on Echo Show. To improve the experience of your skill for Echo Show, you should update it to make use of the new display templates. See the updated voice and graphic guide for more information.

Types of Customer Interaction with Your Skill

The custom skills you have designed for Echo Show must take the following interactions into account:

- Voice: Voice remains the primary means of interacting with Alexa, even on the Echo Show device. If your skill requires a screen to be used effectively, you should create a voice interaction that tells customers on non-screen devices how to interact.

- Alexa app: Your custom skill may display a card with more information in the Alexa app. If the custom skill is used with an Echo Show device, the card automatically appears on the screen. However, if you update your skill with templates for the Display Interface, templates will take precedence and cards will not be displayed.

- Screen display: If your custom skill uses the new display templates, you will need to pair the correct interaction with the correct display template based on the type of items you want to show.

- Screen touch: You may also enable your skill to respond when a customer touches an item on screen. You can use a custom select intent and use action links to create a voice- and touch-interaction experience on body templates (for example, displaying a recipe that corresponds to the selected item on a list of recipes). Also, the list templates are inherently selectable via touch (no action links required).
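To show how voice and touch can lead to the same result, here is a rough sketch. It assumes that tapping a selectable item sends the skill a request of type Display.ElementSelected that carries the token you assigned to that item. The SelectRecipeIntent intent, its Recipe slot, the recipe data, and every helper name are hypothetical.

```javascript
// Hypothetical recipe data, keyed by the token assigned to each list item.
const RECIPES = {
  fondue: "Fondue: melt gruyere with white wine and garlic.",
  "mac-and-cheese": "Mac and cheese: stir cheddar sauce into cooked pasta.",
};

function speak(text) {
  return {
    version: "1.0",
    response: {
      outputSpeech: { type: "PlainText", text },
      shouldEndSession: false,
    },
  };
}

// Both the touch path and the voice path end up here.
function showRecipe(token) {
  const recipe = RECIPES[token];
  return speak(recipe || "Sorry, I couldn't find that recipe.");
}

function handleRequest(event) {
  const request = event.request;
  if (request.type === "Display.ElementSelected") {
    // Touch: the request carries the token of the item that was tapped (assumption).
    return showRecipe(request.token);
  }
  if (request.type === "IntentRequest" && request.intent.name === "SelectRecipeIntent") {
    // Voice: hypothetical custom select intent with a "Recipe" slot.
    const slot = (request.intent.slots || {}).Recipe;
    return showRecipe(slot && slot.value);
  }
  return speak("Pick a recipe from the list, or say its name.");
}
```

If voice and touch share the same token vocabulary, both paths can call a single showRecipe function.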

Displaying Visual Information in Your Alexa Skill

When you develop a skill, you can choose whether or not to support specific interfaces, such as the Display interface for screen display. You will want to create the best possible experience for your customer, regardless of which type of device they have. Even if the screen experience is not the focus of your skill, you should still consider what type of experience you are creating on those devices. The good news is that even if you take no steps to support screen display, the cards you provide for the Alexa app will be displayed on a screen device (Fire Tablet, Fire TV, Echo Show, and AVS-enabled devices that support display cards). If you want to take full advantage of the options provided by Echo Show, such as the ability to select a particular image from a list or play a video, you must specifically support the Display.RenderTemplate directive in your code.

Supporting Voice and GUI Device Scenarios

The best way to support both voice-only and multimodal devices is to have your skill check which interfaces the requesting device supports and then render the appropriate content. In general, customers will interact with a skill differently depending on whether they are using Echo Show or Echo. Your skill can detect this by inspecting event.context.System.device.supportedInterfaces in the Alexa request: the presence of a Display key indicates that the device has a screen. In the sample request below, supportedInterfaces includes AudioPlayer, Display, and VideoApp. Any interface that is not listed under supportedInterfaces is not supported. See the Alexa Cookbook for a Node.js example that does this.

Your skill service code needs to handle both cases: when an interface is not supported, as on a screenless Echo, where the skill should not send directives such as Display.RenderTemplate, and when it is supported, as on Echo Show.

Below is an example showing when a display is supported (notice event.context.System.device.supportedInterfaces.Display). You can also see examples of complete LaunchRequests for when a display is supported, when a display is not supported, and when the request is from the simulator in the Alexa Cookbook.

```json
{
  "context": {
    "System": {
      "device": {
        "supportedInterfaces": {
          "Display": {},
          "AudioPlayer": {},
          "VideoApp": {}
        }
      }
    }
  }
}
```
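A minimal Node.js sketch of the check described above, assuming the request shape shown in the sample. supportedInterfaces and supportsInterface are hypothetical helper names, not part of any Alexa SDK.

```javascript
// Returns the supportedInterfaces object from an Alexa request, or {} if any
// level of the path is missing (optional chaining needs Node.js 14 or later).
function supportedInterfaces(event) {
  return event?.context?.System?.device?.supportedInterfaces ?? {};
}

// An interface counts as supported only when its key is present.
function supportsInterface(event, name) {
  return Object.prototype.hasOwnProperty.call(supportedInterfaces(event), name);
}

// Example: an Echo Show-style request versus a screenless Echo-style request.
const echoShowRequest = {
  context: { System: { device: { supportedInterfaces: { Display: {}, AudioPlayer: {}, VideoApp: {} } } } },
};
const echoRequest = {
  context: { System: { device: { supportedInterfaces: { AudioPlayer: {} } } } },
};

console.log(supportsInterface(echoShowRequest, "Display")); // true
console.log(supportsInterface(echoRequest, "Display")); // false
console.log(supportsInterface(echoRequest, "VideoApp")); // false
```

Checking for the key, rather than for a truthy value, matches how the sample encodes support: each supported interface maps to an empty object.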

Enabling Templates in Your Skill

The Alexa Skills Kit provides two categories of display templates, each with several specifically defined templates for display on Echo Show:

- A body template displays text and images. The images on these templates cannot be made selectable. Text can be made selectable through use of action links.

- A list template displays a scrollable list of items, each with associated text and optional images. These items can be made selectable, as described in the Display Interface Reference.

These templates differ from each other in the size, number, and positioning of the text and images, but each template has a prescribed structure you can work within. When you, as the skill developer, construct a response that includes a display template, you specify the template name, text, and images, as well as markup for font formatting and sizes, so you have latitude to provide the user experience you want.

The Echo Show screen interactions are created with the use of these new templates for the Display interface.

1. On the Skill Information page for your (new or existing) skill, in the Amazon Developer Portal, select Yes for Render Template. Note that you can also select Yes for Audio Player and Video App support, if you want those to be part of your skill. Display.RenderTemplate is the directive used to display content on Echo Show.

2. On the Interaction Model page, you can choose whether to use Skill Builder, or the default page, for building your interaction model.

- If you use the default Interaction Model page, include the built-in intents you want for navigating your templates in the Intent Schema, as you would for a voice-only skill. Ensure you include the required built-in intents.

- If you select Skill Builder, note that some of the built-in intents are already included. Add any other intents your skill requires.

3. In the service code that you write to implement your skill, implement each of these specified built-in intents as desired. Include the Display.RenderTemplate directive in your skill responses to display content on screen as appropriate, just as you would include other directives, as shown in the examples below.
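To make step 3 concrete, here is a hedged sketch of a response builder that always returns speech and attaches the Display.RenderTemplate directive only when the request reports Display support. buildResponse, supportsDisplay, and the cheeseFact template are illustrative names; a skill built on an Alexa SDK would more likely use that SDK's response helpers.

```javascript
// True when the request lists Display under supportedInterfaces.
function supportsDisplay(event) {
  const ifaces = event?.context?.System?.device?.supportedInterfaces ?? {};
  return Object.prototype.hasOwnProperty.call(ifaces, "Display");
}

// Speech is always returned; the RenderTemplate directive is attached only for
// display devices, so a screenless Echo never receives a directive it can't use.
function buildResponse(event, speechText, template) {
  const response = {
    outputSpeech: { type: "PlainText", text: speechText },
    shouldEndSession: false,
  };
  if (template && supportsDisplay(event)) {
    response.directives = [{ type: "Display.RenderTemplate", template }];
  }
  return { version: "1.0", response };
}

// Hypothetical template modeled on the BodyTemplate1 example in this post.
const cheeseFact = {
  type: "BodyTemplate1",
  token: "CheeseFactView",
  title: "Did You Know?",
  textContent: {
    primaryText: { type: "PlainText", text: "The world's stinkiest cheese is from Northern France" },
  },
};

const showEvent = { context: { System: { device: { supportedInterfaces: { Display: {} } } } } };
const echoEvent = { context: { System: { device: { supportedInterfaces: {} } } } };
const onShow = buildResponse(showEvent, "Here's a cheese fact.", cheeseFact);
const onEcho = buildResponse(echoEvent, "Here's a cheese fact.", cheeseFact);
```

Keeping the speech identical on both devices means voice stays the primary interaction, with the screen adding to it rather than replacing it.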

Using the Display Interface Templates

To display interactive graphical content in your skill, you must use display templates. These templates are constructed so as to provide flexibility for you. For each of these templates, the strings for the text or image fields may be empty or null; however, list templates must include at least one list item.
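The rule above can be checked before a response is sent. This is a minimal sketch, assuming that list templates are the ones whose type name starts with "ListTemplate"; it also adds a basic check that a template type is present. validateTemplate is a hypothetical helper, not part of any Alexa SDK.

```javascript
// Returns a list of problems; an empty array means the template passes these checks.
// Text and image fields are deliberately not checked, since they may be empty or null.
function validateTemplate(template) {
  const errors = [];
  if (!template || typeof template.type !== "string" || template.type === "") {
    errors.push("template.type is required");
    return errors;
  }
  // Assumption: list templates are identified by a "ListTemplate" type prefix.
  if (template.type.startsWith("ListTemplate")) {
    const items = template.listItems;
    if (!Array.isArray(items) || items.length === 0) {
      errors.push(`${template.type} must include at least one list item`);
    }
  }
  return errors;
}

console.log(validateTemplate({ type: "BodyTemplate1", token: "CheeseFactView", title: null }));
console.log(validateTemplate({ type: "ListTemplate1", token: "CheeseListView", listItems: [] }));
```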

The skill icon you have selected for the skill in the Amazon Developer Portal appears in the upper right corner of every template screen automatically for you, and is rescaled from the icon images provided in the developer portal. You can change this skill icon in the portal as desired.

Each body template adheres to the following general interface:

```
{
  "type": string,
  "token": string
}
```

Where type is the template name, such as "BodyTemplate1", and token is a name that you choose for the view. (RichText and PlainText are the types used for the text fields inside textContent, not for the template itself.)

Each list template adheres to the following general interface:

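```
{
  "type": string,
  "token": string,
  "listItems": [ ]
}
```

Where type is the template name, such as "ListTemplate1", token is a name that you choose for the view, and listItems holds at least one list item. (RichText and PlainText are the types used for the text fields inside textContent, not for the template itself.)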
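As an illustration of the list interface, this sketch builds a ListTemplate1 directive from plain data and gives each list item its own token, so a touch selection can be traced back to the item. It assumes the item layout of token, image (an Image object with a sources array), and textContent.primaryText; the helper names, cheese data, and example.com URL are placeholders.

```javascript
// Image objects use a sources array rather than a bare URL string.
function makeImage(url, description) {
  return { contentDescription: description, sources: [{ url }] };
}

// items: [{ token, name, imageUrl? }]; imageUrl is optional per item.
function makeListTemplate(token, title, items) {
  return {
    type: "Display.RenderTemplate",
    template: {
      type: "ListTemplate1",
      token,
      title,
      listItems: items.map((item) => ({
        token: item.token,
        // Only add an image when one was supplied.
        ...(item.imageUrl ? { image: makeImage(item.imageUrl, item.name) } : {}),
        textContent: { primaryText: { type: "PlainText", text: item.name } },
      })),
    },
  };
}

const directive = makeListTemplate("CheeseListView", "Cheeses", [
  { token: "parmigiano", name: "Parmigiano Reggiano", imageUrl: "https://www.example.com/parmigiano-reggiano.png" },
  { token: "blue", name: "Blue cheese" },
]);
console.log(JSON.stringify(directive, null, 2));
```

Omitting the image key entirely, rather than sending an empty object, keeps the payload minimal for text-only items.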

The template attribute identifies the template to be used, as well as all of the corresponding data to be used when rendering it. Here is the form for an object that contains a Display.RenderTemplate directive. The type property has the value of the template name, such as BodyTemplate1 in this example. See also the Alexa Cookbook for a code sample using bodyTemplate.

The other template properties will differ depending on the template type value.

```json
{
  "directives": [
    {
      "type": "Display.RenderTemplate",
      "template": {
        "type": "BodyTemplate1",
        "token": "CheeseFactView",
        "backButton": "HIDDEN",
        "backgroundImage": {
          "contentDescription": "Background image",
          "sources": [
            { "url": "https://www.example.com/background-image1.png" }
          ]
        },
        "title": "Did You Know?",
        "textContent": {
          "primaryText": {
            "type": "RichText",
            "text": "The world’s stinkiest cheese is from Northern France"
          }
        }
      }
    }
  ]
}
```
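The same BodyTemplate1 directive can be generated in code rather than written as a literal. This is a sketch under the assumption that RichText accepts display markup while PlainText is shown as-is; textField and makeBodyTemplate1 are hypothetical helper names.

```javascript
// Wraps a string in the text-field shape used inside textContent.
function textField(text, rich = false) {
  return { type: rich ? "RichText" : "PlainText", text };
}

function makeBodyTemplate1({ token, title, primaryText, backgroundUrl, rich = false }) {
  const template = {
    type: "BodyTemplate1",
    token,
    backButton: "HIDDEN",
    title,
    textContent: { primaryText: textField(primaryText, rich) },
  };
  if (backgroundUrl) {
    // backgroundImage is an Image object, not a bare URL string.
    template.backgroundImage = { contentDescription: title, sources: [{ url: backgroundUrl }] };
  }
  return { directives: [{ type: "Display.RenderTemplate", template }] };
}

const body = makeBodyTemplate1({
  token: "CheeseFactView",
  title: "Did You Know?",
  primaryText: "The world's stinkiest cheese is from Northern France",
  backgroundUrl: "https://www.example.com/background-image1.png",
  rich: true,
});
console.log(JSON.stringify(body, null, 2));
```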

For context, see Display and Hint Directives for an example response body that includes multiple directives.

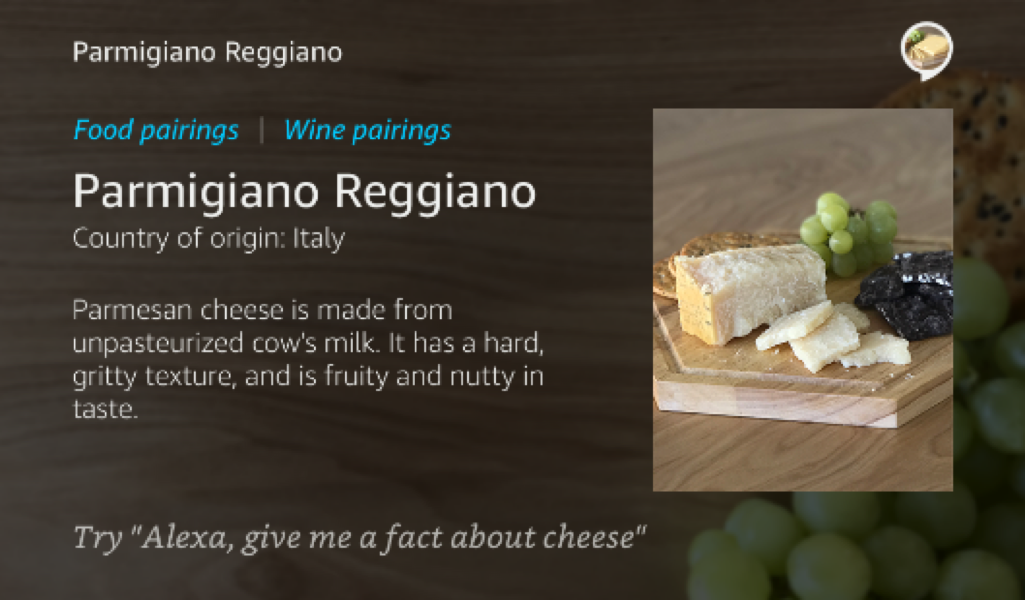

Here is another example using BodyTemplate2 that will display the title “Parmigiano Reggiano”, the skill icon at the upper right, and an image at the right, with the image scaled, if needed, to the appropriate size for this template. The back button, title, background image, and hint text are optional.

```json
{
  "directives": [
    {
      "type": "Display.RenderTemplate",
      "template": {
        "type": "BodyTemplate2",
        "token": "CheeseDetailView",
        "backButton": "HIDDEN",
        "backgroundImage": {
          "contentDescription": "Background image",
          "sources": [
            { "url": "https://www.example.com/background-image1.png" }
          ]
        },
        "title": "Parmigiano Reggiano",
        "image": {
          "contentDescription": "Parmigiano Reggiano",
          "sources": [
            { "url": "https://www.example.com/parmigiano-reggiano.png" }
          ]
        },
        "textContent": {
          "primaryText": {
            "type": "RichText",
            "text": "Parmigiano Reggiano<br/>Country of origin: Italy<br/>Parmesan cheese is made from unpasteurized cow’s milk. It has a hard, gritty texture, and is fruity and nutty in taste."
          }
        }
      }
    },
    {
      "type": "Hint",
      "hint": {
        "type": "PlainText",
        "text": "search for blue cheese"
      }
    }
  ]
}
```
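A multi-line RichText field like the one above can be assembled from separate lines. This sketch assumes that RichText is parsed as XML-like markup, so literal &, <, and > in your data need escaping before lines are joined with <br/>. The helper names and the cheese object are hypothetical.

```javascript
// Escape & first so the entities produced for < and > are not double-escaped.
function escapeRichText(s) {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Joins escaped lines with <br/> so one RichText field can span several lines.
function richLines(lines) {
  return { type: "RichText", text: lines.map(escapeRichText).join("<br/>") };
}

// Builds a BodyTemplate2 render directive plus a Hint directive.
function cheeseDetailDirectives(cheese, hintText) {
  return [
    {
      type: "Display.RenderTemplate",
      template: {
        type: "BodyTemplate2",
        token: "CheeseDetailView",
        backButton: "HIDDEN",
        title: cheese.name,
        image: { contentDescription: cheese.name, sources: [{ url: cheese.imageUrl }] },
        textContent: {
          primaryText: richLines([cheese.name, `Country of origin: ${cheese.origin}`, cheese.description]),
        },
      },
    },
    { type: "Hint", hint: { type: "PlainText", text: hintText } },
  ];
}

const directives = cheeseDetailDirectives(
  {
    name: "Parmigiano Reggiano",
    origin: "Italy",
    description: "Parmesan cheese is made from unpasteurized cow's milk.",
    imageUrl: "https://www.example.com/parmigiano-reggiano.png",
  },
  "search for blue cheese"
);
console.log(JSON.stringify({ directives }, null, 2));
```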

For more details on the new Display Interface Templates please check out our Display Interface Reference.

Playing Video with the Video App Interface

The new VideoApp interface provides the VideoApp.Launch directive for streaming native video files. Your skill can send this directive to start video playback, either in response to a voice request or when a user taps an action link on a template.

To use the VideoApp directive for video playback, you must configure your skill as follows:

- Indicate that your skill implements this interface when configuring the skill in the developer portal. On the Skill Information tab, set the Video App option to Yes. Set the Render Template option to Yes as well, because your skill will likely contain other screen content. (You do not have to use the Display.RenderTemplate directive along with VideoApp, but you would if you want to present a list of videos.)

Note: When your skill is not in an active session but is playing video, or was the skill most recently playing video, utterances such as “stop” send your skill an AMAZON.PauseIntent instead of an AMAZON.StopIntent.

The VideoApp interface provides the VideoApp.Launch directive, which sends Alexa a command to stream the video file identified by the specified videoItem field. The source for videoItem must be a native video file and only one video item at a time may be supplied.

When including a directive in your skill service response, set the type property to the directive you want to send. Here is an example of a full response object sent from a LaunchRequest or IntentRequest.

In this example, one native-format video will be played.

```json
{
  "version": "1.0",
  "response": {
    "outputSpeech": null,
    "card": null,
    "directives": [
      {
        "type": "VideoApp.Launch",
        "videoItem": {
          "source": "https://www.example.com/video/sample-video-1.mp4",
          "metadata": {
            "title": "Title for Sample Video",
            "subtitle": "Secondary Title for Sample Video"
          }
        }
      }
    ],
    "reprompt": null
  },
  "sessionAttributes": null
}
```
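A skill that offers video should also behave sensibly on devices without a screen. The sketch below sends VideoApp.Launch only when the request lists VideoApp under supportedInterfaces and otherwise falls back to speech. It sends a single videoItem, as the interface requires. supportsVideo and launchVideoOrSpeak are hypothetical names, and the fallback wording is illustrative.

```javascript
function supportsVideo(event) {
  const ifaces = event?.context?.System?.device?.supportedInterfaces ?? {};
  return Object.prototype.hasOwnProperty.call(ifaces, "VideoApp");
}

// video: { source, title, subtitle } describing a single native video file.
function launchVideoOrSpeak(event, video) {
  if (!supportsVideo(event)) {
    return {
      version: "1.0",
      response: {
        outputSpeech: { type: "PlainText", text: `Sorry, this device can't play ${video.title}.` },
        shouldEndSession: true,
      },
    };
  }
  // Mirrors the example above: one VideoApp.Launch directive, no speech,
  // and shouldEndSession left unset.
  return {
    version: "1.0",
    response: {
      directives: [
        {
          type: "VideoApp.Launch",
          videoItem: {
            source: video.source,
            metadata: { title: video.title, subtitle: video.subtitle },
          },
        },
      ],
    },
  };
}

const video = {
  source: "https://www.example.com/video/sample-video-1.mp4",
  title: "Title for Sample Video",
  subtitle: "Secondary Title for Sample Video",
};
const showEvent = { context: { System: { device: { supportedInterfaces: { Display: {}, VideoApp: {} } } } } };
const echoEvent = { context: { System: { device: { supportedInterfaces: { AudioPlayer: {} } } } } };
console.log(JSON.stringify(launchVideoOrSpeak(showEvent, video), null, 2));
```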

For more details on VideoApp controls and playback you can check out our VideoApp Interface Reference.

New Built-In Intents for Echo Show Interactions

Built-in intents allow you to add common functionality to skills without the need for complex interaction models. For Echo Show, all of the standard built-in intents are available, but we have added additional built-in intents as well.

These include built-in intents that are handled on the skill’s behalf, as well as built-in intents that are forwarded to the skill and must be managed by the skill developer (such as navigating to a particular template).

| Skill Developer Handles Intent? | Intents for Echo Show | Common Utterances |
|---|---|---|
| Yes | | |
| No | | |

For more details on these new intents, check out the built-in intents for Echo Show documentation.

You may also want to check out these Alexa Skills Kit resources:

- Get Started with Skills for Echo Show

- Sample Showing How to Build a BodyTemplate

- Best Practices for Echo Skill Design

- Display Interface Reference

- Built-In Intents and Selection for Echo Show

- VideoApp Interface Reference

- Alexa Dev Chat Podcast

- Alexa Developer Forums

Build a Skill, Get an Echo Dot

Developers have built more than 15,000 skills with the Alexa Skills Kit. Explore the stories behind some of these innovations, then start building your own skill. If you’re serious about getting into building voice UIs, we’d like to help you explore. If you publish a skill in June, we’ll send you an Echo Dot so you can experiment and daydream.